by Dr Seán Carroll

Updated 28 March 2023

Generative AI is Changing the World

![](/_next/image?url=https%3A%2F%2Fcdn.sanity.io%2Fimages%2Fei1axngy%2Fproduction%2Fffd31dfbb67ff071a05218daa6658b85fbb45ea8-800x448.png&w=2048&q=75)

March 2023 - the month everything changed

It’s fair to say that we’re living through an explosion in the use of what I’d call consumer-facing AI. For the first time, the power of generative AI is breaking through into the wider public consciousness. This started to happen in the summer of 2020, with the release of GPT-3, the third generation of OpenAI’s Generative Pre-trained Transformer (GPT) model.

Things really blew up in November 2022 with the release of ChatGPT - in terms of mass public awareness of generative AI, this release was an inflection point. Since then, we’ve seen releases of ever more advanced models at breakneck speed. At the time of writing, in the last two weeks:

- Microsoft and Google have announced AI integrations for their suites of office tools

- Google released an API to its large language model (LLM), PaLM

- Google broadened access to Bard, its answer to OpenAI’s ChatGPT

- OpenAI released GPT-4, a significantly more capable successor to the GPT-3.5 model behind ChatGPT

- OpenAI also announced Plugins, the ability to augment ChatGPT with additional functionality, e.g. searching the web, performing mathematics and even executing (not just writing) code

- GitHub announced Copilot X, a power boost for their code completion extension Copilot, again powered by OpenAI’s models

- Adobe released its own AI image generator, Firefly - bringing generative AI directly into the creator tool pipeline

- Midjourney released the 5th generation of their image-generating model with significantly enhanced photorealism

Remember, this all happened within the last two weeks! The line often attributed to Lenin, 'there are decades where nothing happens and there are weeks where decades happen', could hardly be more relevant. Consider where we could be by the end of the year.

This technology is having a profound impact on how knowledge and creative work are performed. Developers and digital artists are among the first to feel the ground shift beneath their feet.

As someone who spends a large portion of my working day writing code, I’ve seen my workflow evolve more in the last three months than in the previous three years. No one can really tell what the role of a developer will look like in, say, 12 or 18 months. It’s a thought that’s equal parts exciting and unnerving!

digiLab, as a company, uses machine learning and data science to develop first-of-a-kind solutions, specialising in safety-critical industries. The team are very comfortable exploring the underlying technologies that power many of the product releases I outlined above.

Having said that, we’re also keen to make use of the latest models and tools to capture some efficiencies in our own workflows. Recently, we’ve used Midjourney to help generate the course images for our collection of AI in the Wild online courses. In the rest of this post, I’ll give you a quick summary of how we did this, and I’ll conclude with some thoughts on the wider implications of AI entering the workplace.

What is Midjourney and AI Image Generation?

Midjourney is one of several popular AI image generation models. The other popular models are DALL-E 2 (by OpenAI) and Stable Diffusion (by Stability AI). These models generate original images based on a user’s text prompt.

This has given rise to the term prompt engineering, which refers to the practice of crafting a text prompt such that the model gives you a desired output. Personally, I don't love the term ‘prompt engineering’ - as a trained structural engineer, I might be biased :) - but we’ll leave that for another day!

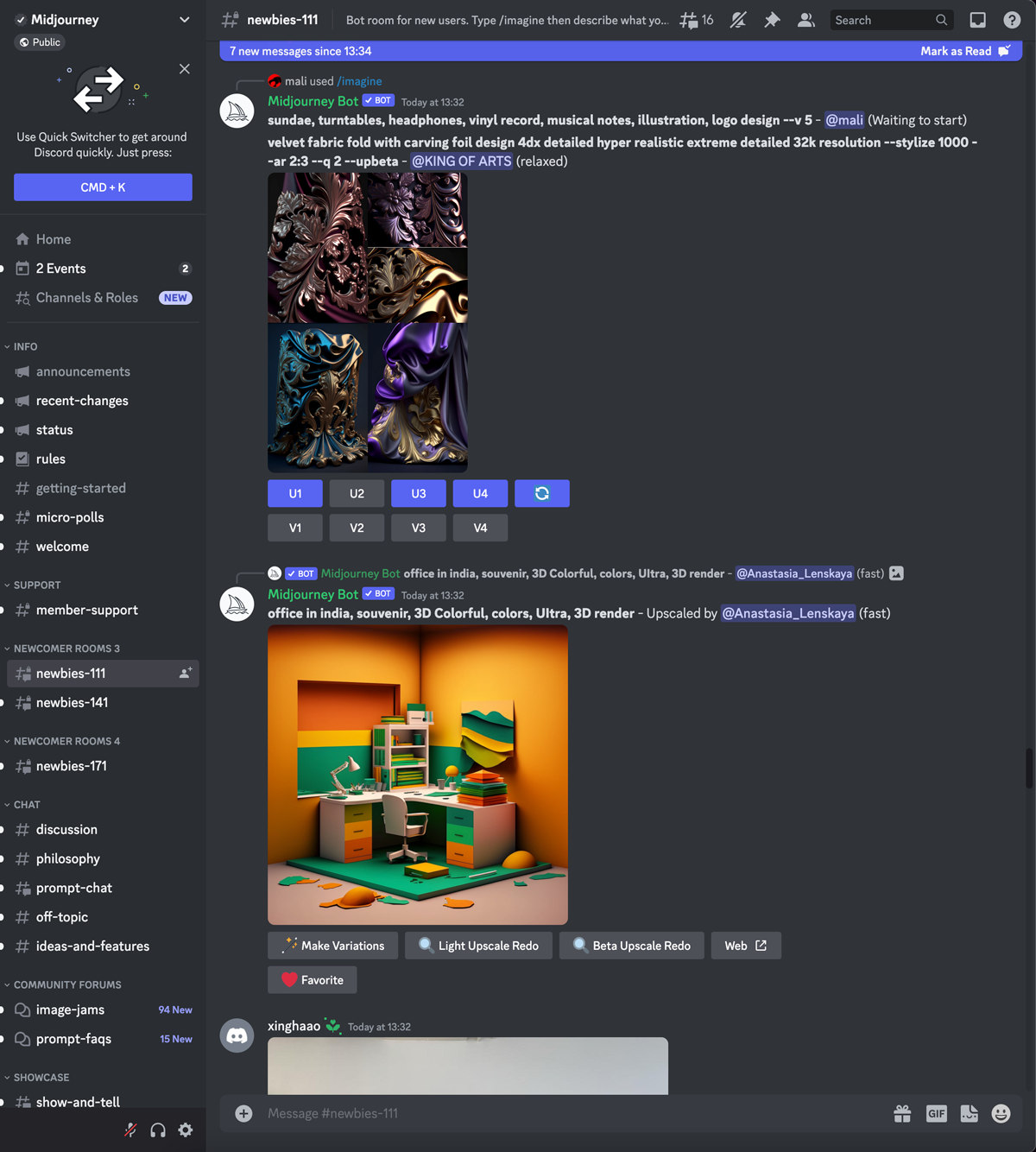

You interact with Midjourney through Discord. Essentially, you join the Midjourney server on Discord and issue image prompts to the Midjourney bot. If you’re not familiar with how Discord works, Midjourney’s getting started guide is very easy to follow.

One slightly annoying thing about using Midjourney on Discord is that your images are generated in a public channel alongside everyone else who is using the server. So, it can be hard to keep track of what’s going on.

Figure 1. A typical public channel on the Midjourney Discord server

A great hack is to set up your own server and invite the Midjourney bot (much easier than it sounds). This way, all of your images are generated in isolation and not mixed in with everyone else. Keep in mind, the images you generate are not private and will be posted (along with the prompt that generated them) on Midjourney.com. You also need a paid Midjourney account to use the images commercially.

Using Midjourney to solve a real-world problem

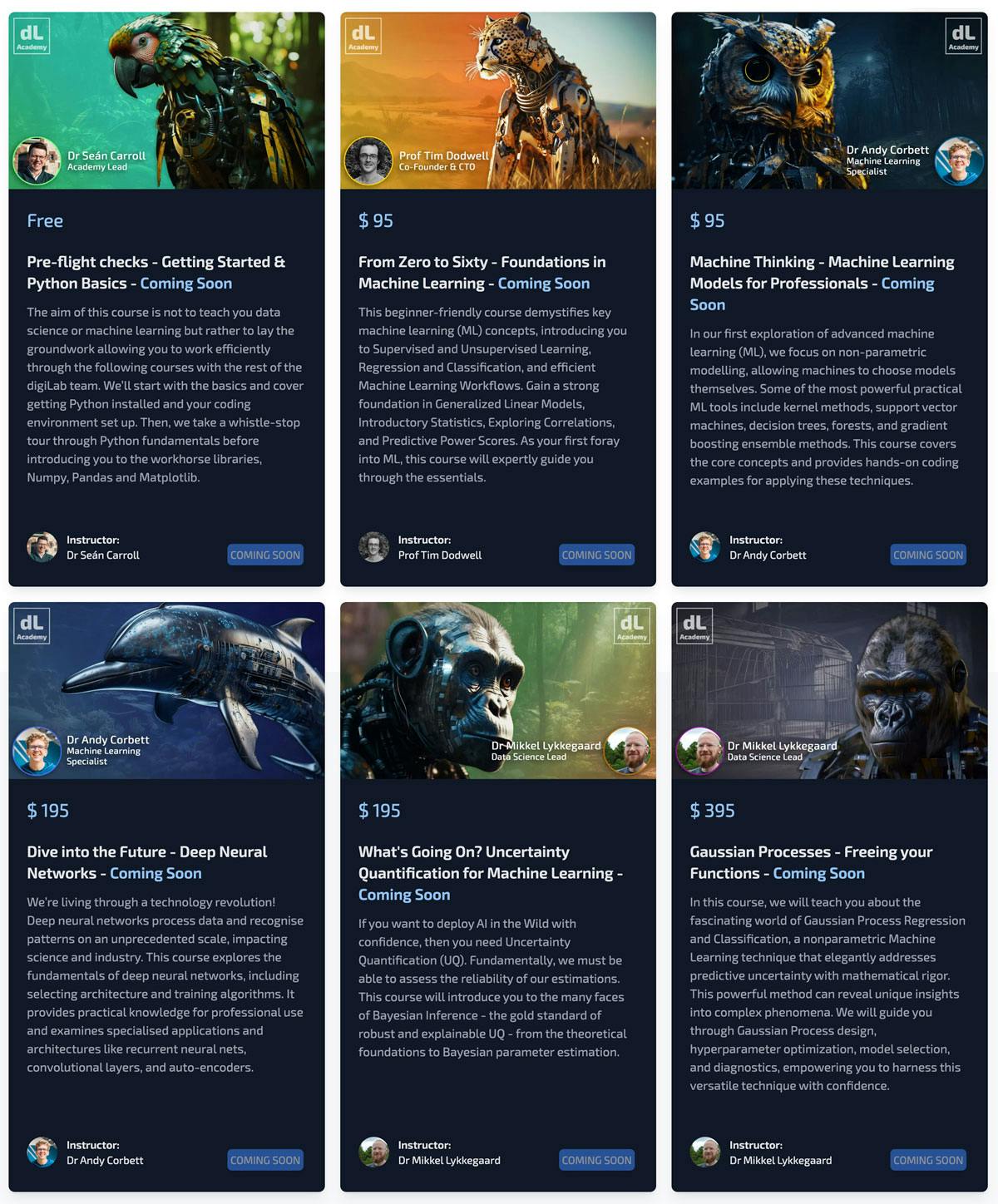

Here at digiLab Academy, we’re crafting a collection of courses to teach people all about machine learning and data science. The overarching theme of this first collection is 'AI in the Wild' - we want to teach people to do what we do: apply machine learning techniques to messy real-world data.

As part of developing our collection, we needed some course images to give each course some identity and, frankly, to help us market the courses. We toyed with the idea of commissioning a freelance digital artist but first decided to try a DIY solution. As the in-house generative AI enthusiast, it fell to me to see what I could muster up.

The first question I grappled with was, what should a visual representation of a course on data science look like? An image filled with equations and graphs is hardly compelling, so after pondering this for far too long, I decided to lean into the ‘AI in the Wild’ theme and experiment with images of animals.

In a roundabout reference to artificial intelligence, I thought some form of cyborg-style creature might work. Then it was just a matter of associating an animal with each course. For this, I took direction from the course titles, so for ‘From Zero to Sixty - Foundations in Machine Learning’, a cheetah seemed to make sense.

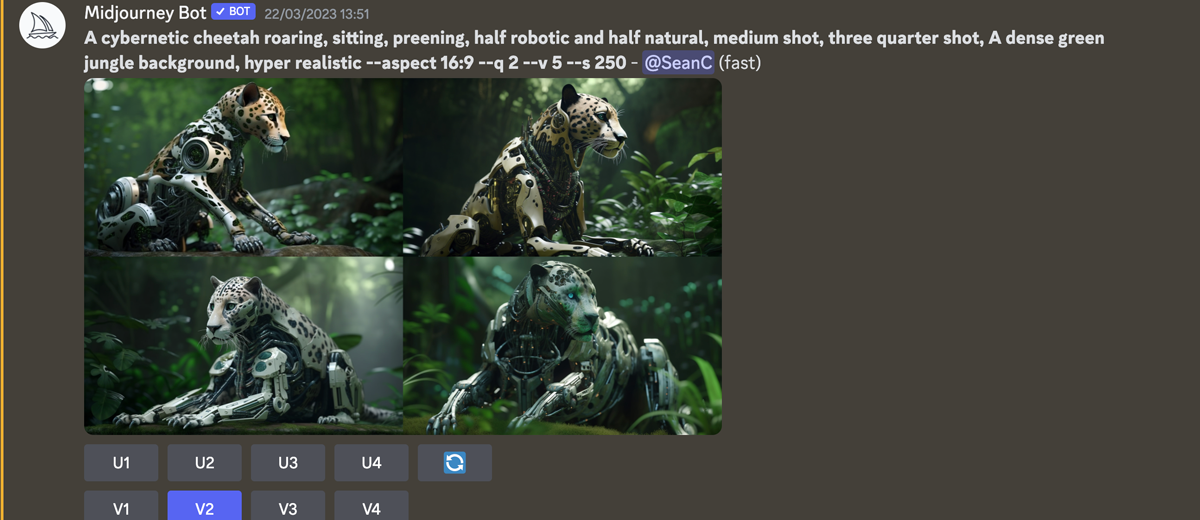

With the centrepiece of the course image loosely defined as some form of cyborg cheetah, it was time to see what I could do with Midjourney. My first prompt attempt was:

A cybernetic cheetah roaring, sitting, preening, half robotic and half natural, medium shot, three-quarter shot, a dense green jungle background, hyper-realistic.

The prompt is a mixture of natural language descriptions and keywords. You’ll notice the terms ‘medium shot’ and ‘three-quarter shot’. This is in an effort to steer the composition of the image.

Similarly, the term ‘hyper-realistic’ is used to indicate that I’d like a photo-like image rather than something more cartoony. These are not specific terms that have been documented for use with Midjourney; rather, they’re just terms that naturally came to mind when I tried describing what I wanted.
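To make the structure of that prompt explicit, here is a minimal sketch that assembles it from its parts: subject, descriptive details, composition keywords, background and style. The `build_prompt` helper and its parameter names are purely illustrative assumptions; they are not part of Midjourney, which simply takes the finished text.

```python
def build_prompt(subject, details, composition, background, style):
    """Join prompt fragments into a single comma-separated prompt string."""
    fragments = [subject, *details, *composition, background, style]
    # Skip empty fragments so optional parts can be left out cleanly.
    return ", ".join(f.strip() for f in fragments if f and f.strip())


# Hypothetical breakdown of the first cheetah prompt into named parts.
prompt = build_prompt(
    subject="A cybernetic cheetah roaring",
    details=["sitting", "preening", "half robotic and half natural"],
    composition=["medium shot", "three-quarter shot"],
    background="a dense green jungle background",
    style="hyper-realistic",
)
print(prompt)
```

Splitting the prompt up like this makes it easy to swap a single piece, such as the background, while keeping everything else fixed between iterations.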

That first prompt gave me the following result:

Figure 2. First attempt at producing a photorealistic cyborg cheetah

Midjourney gives you four variations of the image in response to each text prompt. You can then choose which, if any, you’d like to upscale (U1-U4 buttons). You can also request four more variations of any of the original variations (V1-V4 buttons). After several more iterations, I ended up with this guy:

Figure 3. Upscaled cheetah after several iterations and prompt tweaks

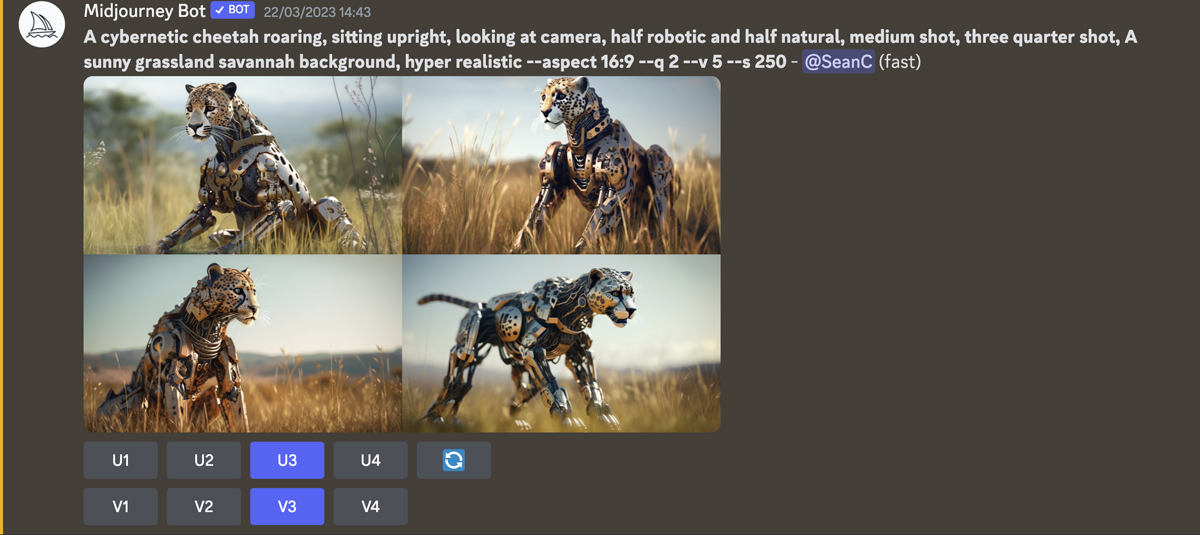

One of the great strengths of AI image generation is that you can generate so many variations with nominal additional cost. I found that arriving at something I was really happy with actually took quite a lot of iteration. It’s definitely not a ‘one and done’ process. After shopping the previous image around the office, I went at it again, this time moving from the jungle to an African savannah grassland! The following prompt:

A cybernetic cheetah roaring, sitting upright, looking at camera, half robotic and half natural, medium shot, three-quarter shot, A sunny grassland savannah background, hyper-realistic

...produced,

Figure 4. Four variations in a grassland setting

I was immediately drawn to number three in the bottom left corner and decided to call it a day there.

Figure 5. Upscaled final cheetah
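If you ever want to crop one variation out of a saved four-image grid yourself, the numbering described above (image three sits bottom left) is consistent with a row-major 2x2 layout. The sketch below computes the pixel box for a given image number under that assumption; the `quadrant_box` helper and the 2048x2048 grid size are hypothetical and chosen only for illustration.

```python
def quadrant_box(number, width, height):
    """Return the (left, top, right, bottom) pixel box for image 1-4 in a 2x2 grid."""
    if number not in (1, 2, 3, 4):
        raise ValueError("number must be 1, 2, 3 or 4")
    # Assumed row-major numbering: 1 and 2 on the top row, 3 and 4 below.
    row, col = divmod(number - 1, 2)
    half_w, half_h = width // 2, height // 2
    left, top = col * half_w, row * half_h
    return (left, top, left + half_w, top + half_h)


# Hypothetical 2048x2048 grid image; image 3 is the bottom-left quadrant.
print(quadrant_box(3, 2048, 2048))
```

The resulting box is in the (left, top, right, bottom) order that most image libraries expect for a crop.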

One impressive feature of these generated images is their level of photorealism. This is one of the strengths of the latest version of Midjourney, V5. What really sells the photorealism is the shallow depth of field within the image - this is an effect that can be generated in traditional photography by setting a low f-stop (a wide aperture) on your lens. The result is a sharply in-focus subject with a soft blurred background.
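As a rough check on how aperture relates to depth of field, the sketch below uses the standard thin-lens approximation DoF ≈ 2·N·c·u² / f², which holds when the subject distance u is much smaller than the hyperfocal distance. Here N is the f-number, c the circle of confusion and f the focal length. The 200 mm lens, 10 m subject distance and 0.03 mm circle of confusion are illustrative assumptions, not values taken from the images in this post.

```python
def approx_depth_of_field_mm(focal_length_mm, f_number, subject_distance_mm,
                             circle_of_confusion_mm=0.03):
    """Approximate total depth of field: 2 * N * c * u^2 / f^2 (all lengths in mm)."""
    return (2 * f_number * circle_of_confusion_mm * subject_distance_mm ** 2
            / focal_length_mm ** 2)


# Hypothetical wildlife shot: 200 mm lens, subject 10 m away.
wide_open = approx_depth_of_field_mm(200, 2.8, 10_000)
stopped_down = approx_depth_of_field_mm(200, 16, 10_000)
print(f"f/2.8: {wide_open / 1000:.2f} m in focus")
print(f"f/16:  {stopped_down / 1000:.2f} m in focus")
```

Under these assumptions, f/2.8 keeps roughly 0.4 m in focus while f/16 keeps roughly 2.4 m, so it is the low f-number that isolates the subject against a blurred background.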

For a standalone composition, the image, as is, looks great. But to add more visual interest, I decided to generate an in-focus background using Midjourney and to composite these together in Photoshop. I generated a backplate image using the following prompt:

A sun-drenched, parched grassy savannah with mountains in the background, orange, yellow, hyper-realistic

This resulted in,

Figure 6. Backplate image

At this point, I had collected all of the source material needed. Now I could just composite and blend everything together in Photoshop. I won’t labour the details here; I just used standard Photoshop workflows - cropping, layering, applying adjustment layers and so on - basically tweaking dials and settings until I had something that looked good to me.

Figure 7. Photoshop workspace and final layer stack
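For readers curious about what layering amounts to numerically, here is a minimal sketch of a normal blend with layer opacity: each output channel is a weighted average of the foreground and background pixel. This is the textbook formula rather than a description of Photoshop's internals, and the `blend_normal` helper and pixel colours are hypothetical.

```python
def blend_normal(foreground, background, opacity):
    """Blend two RGB pixels (0-255 per channel) with a 0.0-1.0 foreground opacity."""
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0.0 and 1.0")
    return tuple(
        round(fg * opacity + bg * (1.0 - opacity))
        for fg, bg in zip(foreground, background)
    )


cheetah_pixel = (200, 150, 60)    # hypothetical foreground colour
savannah_pixel = (240, 200, 120)  # hypothetical backplate colour
print(blend_normal(cheetah_pixel, savannah_pixel, 1.0))  # fully opaque foreground
print(blend_normal(cheetah_pixel, savannah_pixel, 0.5))  # 50% layer opacity
```

At full opacity the foreground pixel wins outright; at lower opacities the backplate shows through, which is what softens the edge between the subject and the new background.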

Some final thoughts on generative AI

On the surface, this project was a bit of fun - an enjoyable break from my typical coding and development work here at digiLab. However, reflecting on the exercise, I’m struck with a more profound sense of what this project exemplifies - a seismic shift in how we do knowledge-based and creative work.

Could we have achieved a better result by employing a professional graphic designer? Almost certainly, yes! However, does the end result achieve what we were after - generating an eye-catching and unique visual identity for each course? Yes, I think it does. The kicker is, we could generate all of our course images in about 6-8 hours without liaising back and forth with an external contractor.

Figure 8. Complete collection of course images produced using the same techniques

For me, this is quite significant and symbolises what we’re going to see more and more as generative AI continues to improve and seep into our traditional workflows. A graphic designer didn’t get a commission to generate these images because we could do a ‘good enough’ job in-house by leveraging AI. If this single example is extrapolated across many more businesses and activities, it’s not hard to forecast turbulent times ahead as labour markets adapt to this new force.

This is a particularly bitter pill to swallow for graphic designers and artists when you realise that these generative models have been trained by scraping the internet for human-generated artwork. The resulting ethical arguments over attribution and compensation are likely to rage for some time to come. All of these challenges are faced equally by programmers, I hasten to add! LLMs can write code just as well as the image models generate convincing photorealistic images.

However, we can choose to take a more optimistic view. The real power of these generative tools is on display not in the hands of a novice user, but when they’re deployed by someone competent in the field. They become a force multiplier, enabling programmers to generate code 2-5 times faster than before. Artists and writers can equally turn out work much faster by leaning on AI tools.

So, if you’re in a field on course to be disrupted by AI, the smart move is to familiarise yourself with these new tools. In a word: adapt. Leverage them to increase the quality and quantity of your output beyond what you could do alone. The real trap to avoid is sticking your head in the sand, hoping that somehow you’ll be able to avoid the incoming wave of disruption.
