
by Dr Andy Corbett

Updated 31 March 2023

How to Build a Digital Twin of a City

![How to Build a Digital Twin of a City](/_next/image?url=https%3A%2F%2Fcdn.sanity.io%2Fimages%2Fei1axngy%2Fproduction%2Fb53fc3f87c7cd7bac60ff481ffaa139e18e95685-1000x703.png&w=2048&q=75)

We build digital twins to better make sense of complex, interwoven, multi-headed problems.

In this post, we will walk through an exciting new venture we have been working on at digiLab: building a digital twin of a city for green energy planning, net-zero attainment and sustainability. We are calling this project twinCity.

But before thinking about how we build digital twins, let us begin at the shallow end with the whys and the whats.

What do we mean by a digital twin?

For that matter, what do we mean by AI? These terms can be plugged into all sorts of gaps, but here is what they mean to us. A digital twin is a surrogate model for a system that is difficult to simulate directly, or one that has many connected parts.

For example, we use digital twins to model the processes occurring in nuclear fusion reactors. Here, the physics-based models are so complex that a single run is extremely time-consuming. We build digital twins that emulate these dynamics and give informative predictions from limited data. These emulators are cheap to run and, at the same time, return the statistical uncertainty attached to their predictions.

Figure 1. A Gaussian process regression model drawing inferences, with confidence intervals, from just 10 training points
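To make Fig. 1 concrete, here is a minimal Gaussian process regression sketch in NumPy. It is an illustration, not digiLab's code. The squared-exponential kernel, its fixed hyperparameters and the `sin` function standing in for an expensive simulator are all assumptions. The posterior standard deviation produces the confidence band. It shrinks almost to zero at the 10 training points and grows back towards the prior away from them.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between two sets of 1D inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_predict(x_train, y_train, x_test, length_scale=1.0, variance=1.0, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_test."""
    K = rbf_kernel(x_train, x_train, length_scale, variance) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, length_scale, variance)  # shape (n_train, n_test)
    L = np.linalg.cholesky(K)  # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # K^{-1} y
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)  # L^{-1} K_s
    var = variance - np.sum(v**2, axis=0)  # prior variance minus the part explained by data
    return mean, np.sqrt(np.clip(var, 0.0, None))


# Ten runs of a stand-in "expensive simulator" (here just sin(x)).
x_train = np.linspace(0.0, 10.0, 10)
y_train = np.sin(x_train)
x_test = np.linspace(0.0, 10.0, 200)

mean, std = gp_predict(x_train, y_train, x_test)
lower, upper = mean - 1.96 * std, mean + 1.96 * std  # approx. 95% confidence band
```

In practice, the kernel hyperparameters would be fitted by maximising the marginal likelihood rather than fixed by hand.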

These models fall under the umbrella of AI, because an algorithm has learnt to make predictions from pre-existing data. We call this kind of inference intelligent, and it is certainly artificial given its algorithmic derivation. The field of AI moved forward in huge leaps in the first half of 2023, particularly with AI assistants such as ChatGPT; see the brilliant application of these tools by our very own Dr Seán Carroll in this post.

Why do they make such powerful tools?

Small digital twins, such as the one in Fig. 1, can be applied to different aspects of a large problem. The key idea behind constructing digital twins of large-scale systems is to model small components with bespoke techniques, and then assemble a larger system from these functioning, cheap-to-evaluate parts.

Once we have a large model with many component parts, we can interrogate the whole in intelligent ways, using the cheap-to-run surrogate parts to inform a larger AI algorithm. For example, if we could model all the different energy demands and supplies across a whole city, a reinforcement learning algorithm could digest these predictions and suggest the changes we need to make to achieve our goals, such as net zero.

This gives us two useful outputs:

- A descriptive, easy-access model that machine learning algorithms can interrogate to assist global decision making.

- Clear, layer-by-layer data visualisations of the component parts of the digital twin.
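Here is a minimal sketch of the assemble-from-parts idea described earlier. It assumes a hypothetical interface in which every component surrogate maps an hour of the day to a power prediction in kW. `CityTwin`, `toy_solar` and all the numbers are illustrative stand-ins, not part of twinCity. Because the twin only sums cheap component calls, a decision-making algorithm can query many what-if variants quickly (`with_supply`). Each component can also still be inspected on its own as a layer (`layer`).

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical interface: a component surrogate maps an hour (0-23) to power in kW.
# In a real twin each one would be a trained emulator rather than a formula.
Surrogate = Callable[[int], float]


@dataclass
class CityTwin:
    supplies: Dict[str, Surrogate] = field(default_factory=dict)
    demands: Dict[str, Surrogate] = field(default_factory=dict)

    def net_supply(self, hour: int) -> float:
        """Total predicted supply minus total predicted demand at a given hour (kW)."""
        supply = sum(model(hour) for model in self.supplies.values())
        demand = sum(model(hour) for model in self.demands.values())
        return supply - demand

    def layer(self, name: str) -> List[float]:
        """Hourly predictions from a single component, e.g. to plot one layer."""
        model = self.supplies.get(name) or self.demands[name]
        return [model(h) for h in range(24)]

    def with_supply(self, name: str, model: Surrogate) -> "CityTwin":
        """Return a what-if copy of the twin with one extra supply component."""
        return CityTwin({**self.supplies, name: model}, dict(self.demands))


def toy_solar(hour: int) -> float:
    # Stand-in solar surrogate: 50 kW peak at noon, zero overnight.
    return max(0.0, 50.0 * math.sin(math.pi * (hour - 6) / 12))


twin = CityTwin(supplies={"solar": toy_solar}, demands={"homes": lambda h: 20.0})
daily_net = sum(twin.net_supply(h) for h in range(24))  # kWh over the day
bigger = twin.with_supply("more_solar", toy_solar)       # scenario to compare against
```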

Introducing: twinCity

To start developing a large-scale digital twin of a city, we set ourselves the following challenge:

Can we perform a city-wide audit of potential solar energy capture?

We are based in Exeter, a beautiful historic city with ambitious net-zero goals. Everything needs to start somewhere, and we felt this was a good starting point for our digital twin vision.

The short answer to our challenge is: yes! In Fig. 2 we display a clip of our interactive map. What have we found?

- Each structure on the map is a rooftop detected by a deep convolutional neural network.

- Each rooftop is divided into slopes. The aspect and pitch of these slopes determine the solar energy that can land there.

- The colour indicates efficiency (energy capture per square metre), with green being the most efficient.

- The height indicates total energy capture over a typical day, which depends on the slope area as well as the efficiency.

Figure 2. A map of Exeter displaying the solar potential of all the rooftops in the region

We think of this as the first layer of our digital twin. The visual output is very useful for exploring different regions and identifying, say, the most efficient rooftops in each.

It is just as useful as an input to further machine learning models, which can make rapid predictions and propose the best areas for solar panels given a fixed installation budget.

This project uses all the machine learning tricks in the book, which makes it an interesting tale to tell. We will use the rest of this article to sketch out how we built the model.

Computer vision and Bayesian modelling for rooftop detection

First things first: where is the data? Of course, we started with nothing. Freely available data included satellite data as well as LiDAR data, which gives a geospatial array of coordinates across a map (sometimes a little sparse).

- Step one was to train a deep convolutional neural network to identify which pixels in a map are likely to belong to a rooftop, giving us a 'rooftop mask'.

Computer Vision Model

- Step two was to find the contours of this rooftop mask to single out individual rooftop polygons. It is then possible to segment the LiDAR points that fall within each one.

- Step three was to identify all the sub-slopes of each rooftop alongside their pitch and aspect (the "south-facing-ness"), which we need in order to estimate the solar irradiance landing on the roof. This is the tricky bit, and we needed to get creative with our machine learning toolkit:

- We used the k-nearest neighbours algorithm to group nearby points and analytically compute the gradients at each LiDAR point.

- We used a Bayesian Gaussian mixture, combined with PCA decompositions, to cluster similar gradients.

- We fitted a 2D Bayesian linear regression to the points in each cluster to find the slope. From this, we deduced the pitch and aspect.

If that doesn't get you excited to apply machine learning, I don't know what will!
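The segmentation step and the final slope-fitting sub-step above can be sketched for a single sub-slope. This is a minimal illustration under stated assumptions, not the twinCity pipeline:

- The roof footprint, the synthetic LiDAR points and the noise level are made up.
- The point-in-polygon test is the standard even-odd ray-casting rule.
- The 2D Bayesian linear regression uses a zero-mean Gaussian prior and a known noise precision.
- The k-nearest neighbour gradients and Gaussian mixture clustering are skipped by assuming every point inside the polygon lies on one plane.

Sampling the weight posterior turns the single fit into a distribution over pitch and aspect.

```python
import numpy as np


def point_in_polygon(px, py, polygon):
    """Even-odd ray casting: is (px, py) inside the polygon given as [(x, y), ...]?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # this edge straddles the horizontal ray
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def fit_plane_bayes(x, y, z, alpha=1e-3, beta=1.0 / 0.05**2):
    """Bayesian linear regression for the plane z = w0 + w1*x + w2*y.

    Prior w ~ N(0, I/alpha); Gaussian noise with known precision beta.
    Returns the posterior mean and covariance of w."""
    X = np.column_stack([np.ones_like(x), x, y])
    S = np.linalg.inv(alpha * np.eye(3) + beta * X.T @ X)
    S = 0.5 * (S + S.T)  # symmetrise against round-off
    m = beta * S @ X.T @ z
    return m, S


def pitch_aspect(w1, w2):
    """Pitch (degrees from horizontal) and aspect (compass bearing of the downhill
    direction in degrees, 180 = south-facing), with x pointing east and y north."""
    pitch = np.degrees(np.arctan(np.hypot(w1, w2)))
    aspect = np.degrees(np.arctan2(-w1, -w2)) % 360.0
    return pitch, aspect


# Synthetic example: a 10 m x 10 m roof rising towards the north (so facing south).
rng = np.random.default_rng(0)
roof = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
pts = rng.uniform(-2.0, 12.0, size=(400, 2))
heights = 5.0 + 0.5 * pts[:, 1] + rng.normal(0.0, 0.05, size=400)

mask = np.array([point_in_polygon(px, py, roof) for px, py in pts])  # segment points
m, S = fit_plane_bayes(pts[mask, 0], pts[mask, 1], heights[mask])
pitch_mean, aspect_mean = pitch_aspect(m[1], m[2])

# Propagate the weight uncertainty to pitch and aspect by posterior sampling.
w_samples = rng.multivariate_normal(m, S, size=2000)
pitches, aspects = pitch_aspect(w_samples[:, 1], w_samples[:, 2])
```

The spread of `pitches` and `aspects` is the kind of uncertainty estimate a Bayesian fit provides for free.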

- Step four was to probabilistically evaluate a solar irradiance model, giving a distribution of the solar irradiance arriving on each rooftop as a function of cloud cover. This is the final output, alongside the power output, which we compute from the area of each sub-slope.
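Here is a hedged sketch of step four for one sub-slope. It models only direct-beam irradiance on the tilted slope, using the standard angle-of-incidence formula. It then attenuates this with the empirical Kasten-Czeplak cloud factor 1 - 0.75 (N/8)^3.4 for cloud cover N in oktas. That factor was derived for global horizontal irradiance, so applying it here is our own simplification. The sun position, clear-sky beam irradiance, panel efficiency and the uniform distribution over cloud cover are all hypothetical inputs. Sampling cloud cover yields a distribution of irradiance and power rather than a single number.

```python
import numpy as np


def incidence_cosine(sun_zenith, sun_azimuth, pitch, aspect):
    """Cosine of the angle between the sun's rays and the slope normal (inputs in degrees).

    Azimuth and aspect are compass bearings (0 = north, 180 = south).
    Negative values mean the sun is behind the slope, so the result is clipped at zero."""
    z, a, p, s = np.radians([sun_zenith, sun_azimuth, pitch, aspect])
    cos_theta = np.cos(z) * np.cos(p) + np.sin(z) * np.sin(p) * np.cos(a - s)
    return max(float(cos_theta), 0.0)


def cloud_factor(oktas):
    """Kasten-Czeplak attenuation 1 - 0.75 (N/8)^3.4 for cloud cover N in oktas (0-8)."""
    return 1.0 - 0.75 * (np.asarray(oktas, dtype=float) / 8.0) ** 3.4


def slope_samples(beam_irradiance, cos_theta, area_m2, panel_efficiency, oktas):
    """Plane-of-array irradiance (W/m^2) and electrical power (W) for each cloud sample."""
    irradiance = beam_irradiance * cos_theta * cloud_factor(oktas)
    power = irradiance * area_m2 * panel_efficiency
    return irradiance, power


rng = np.random.default_rng(1)
cos_theta = incidence_cosine(sun_zenith=40.0, sun_azimuth=180.0, pitch=30.0, aspect=180.0)
oktas = rng.integers(0, 9, size=5000)  # hypothetical: every cloud level equally likely
irradiance, power = slope_samples(900.0, cos_theta, area_m2=20.0,
                                  panel_efficiency=0.2, oktas=oktas)
p5, p50, p95 = np.percentile(power, [5, 50, 95])  # summary of the power distribution
```

A fuller model would add diffuse and reflected components and integrate over the sun's path through the day.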

Voilà! We now have a method to estimate the power and irradiance for each rooftop, and the Bayesian linear model lets us quantify how certain we are. But what can we do with this very detailed information?

Show me the optimal rooftops for solar capture

Let us see how we can flex the model to produce a useful result. We have set up an optimisation routine that maximises the total solar energy collected whilst varying the total spend on the solar panels themselves.

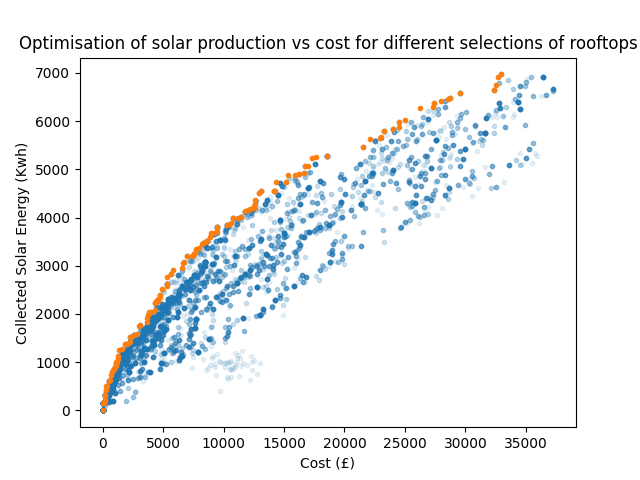
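Here is the problem in our own notation (an illustration; the exact formulation behind Fig. 3 may differ). Let $x_i \in \{0,1\}$ indicate whether sub-slope $i$ gets panels, $E_i$ be its expected daily energy and $c_i$ its installation cost. For a fixed budget $B$ this is a 0/1 knapsack problem. Solving it over a sweep of budgets traces out the energy-versus-cost trade-off, which is the epsilon-constraint approach to a two-objective problem:

$$
\max_{x \in \{0,1\}^n} \sum_{i=1}^{n} E_i\, x_i
\quad \text{subject to} \quad \sum_{i=1}^{n} c_i\, x_i \le B
$$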

We solve this optimisation problem by setting up a genetic algorithm to interrogate our digital twin for different combinations of rooftop slopes. Inspired by natural selection, the algorithm evolves candidate combinations through random variation, and the most efficient combinations of rooftops are the ones that survive. Fig. 3 shows an array of solutions, where each scatter point represents a selection of rooftop slopes on which to place panels. The orange points are the solutions that maximise energy collection for a given total installation cost.

Figure 3. Optimal solutions to solar panel assignment to maximise total energy generated for varying spend on equipment
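Here is a compact genetic algorithm for the fixed-budget version of the problem. It is a sketch, not the twinCity implementation, and works as follows:

- Each genome is a 0/1 list over sub-slopes, and over-budget genomes score zero.
- Parents are chosen by tournament selection and combined by one-point crossover with bit-flip mutation.
- One individual is seeded with a greedy energy-per-cost solution, and the two fittest survive each generation. This is our own choice, and it guarantees the result is never worse than the greedy heuristic.

The energies and costs are synthetic.

```python
import random
from typing import List, Sequence, Tuple


def total(values: Sequence[float], genome: Sequence[int]) -> float:
    """Sum of the values for the selected sub-slopes (genes equal to 1)."""
    return sum(v for v, g in zip(values, genome) if g)


def greedy_selection(energy, cost, budget) -> List[int]:
    """Baseline: take slopes in order of energy per unit cost while the budget allows."""
    order = sorted(range(len(energy)), key=lambda i: energy[i] / cost[i], reverse=True)
    genome, spent = [0] * len(energy), 0.0
    for i in order:
        if spent + cost[i] <= budget:
            genome[i] = 1
            spent += cost[i]
    return genome


def genetic_selection(energy, cost, budget, pop_size=60, generations=100,
                      mutation_rate=0.02, seed=0) -> Tuple[List[int], float]:
    """Evolve 0/1 panel assignments that maximise energy within the budget."""
    rng = random.Random(seed)
    n = len(energy)

    def fitness(genome):
        # Over-budget selections score zero, so selection pressure removes them.
        return total(energy, genome) if total(cost, genome) <= budget else 0.0

    population = [[int(rng.random() < 0.2) for _ in range(n)] for _ in range(pop_size - 1)]
    population.append(greedy_selection(energy, cost, budget))  # seed one good individual

    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        next_gen = ranked[:2]  # elitism: the two fittest survive unchanged
        while len(next_gen) < pop_size:
            # Tournament selection: the fitter of three random individuals becomes a parent.
            p1 = max(rng.sample(ranked, 3), key=fitness)
            p2 = max(rng.sample(ranked, 3), key=fitness)
            cut = rng.randrange(1, n)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            child = [1 - g if rng.random() < mutation_rate else g for g in child]  # bit-flip mutation
            next_gen.append(child)
        population = next_gen

    best = max(population, key=fitness)
    return best, fitness(best)


# Synthetic instance: 30 sub-slopes with made-up daily energies and installation costs.
data_rng = random.Random(42)
energy = [data_rng.uniform(5.0, 30.0) for _ in range(30)]  # kWh/day
cost = [data_rng.uniform(1.0, 8.0) for _ in range(30)]     # £k
front = []
for budget in (20.0, 40.0, 60.0):
    genome, e = genetic_selection(energy, cost, budget)
    front.append((budget, total(cost, genome), e))
```

Each `(budget, spent, energy)` tuple in `front` is one point on an energy-versus-spend curve, in the spirit of Fig. 3. A dedicated multi-objective method such as NSGA-II would search for the whole front in a single run instead of one budget at a time.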

This is just one example of the power of digital twins: decision makers can use them as tools to find quick, detailed answers to important questions. In this case: "What is the benefit of installing a given amount of solar in an area, and where should we put the panels?" Let me know if ChatGPT can handle that one.

What next for twinCity?

This solar audit layer is just the first step on the road to net zero for twinCity. We are currently developing an air pollution simulator, to be trained on data from mobile remote sensors around the city.

On the local energy side, we are researching demand on local sub-grids. We are developing tools to evaluate the impact of green energy solutions on these grids, including:

- Smart control of heat pumps.

- Flexible energy management.

- Storage benefits to local networks.

Modelling these aspects in a connected digital twin allows us to use reinforcement learning to train agents that control systems based on a wide variety of factors, a task simply unimaginable before the AI revolution.
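To give a flavour of what training an agent to control a system looks like, here is a toy tabular Q-learning sketch for one of the aspects listed above: battery storage on a sub-grid. Everything in it is hypothetical, including the hourly prices, the constant demand, the 4 kWh battery in 1 kWh steps and the learning parameters. In a real twin, `step` would call the component surrogates instead of a hand-written rule. The agent should learn to charge when power is cheap and discharge at the evening peak.

```python
import random

# All numbers below are hypothetical, chosen only to make the toy problem interesting.
PRICES = [0.10] * 7 + [0.20] * 10 + [0.40] * 4 + [0.20] * 3  # £/kWh for hours 0-23
DEMAND = 1.0          # kWh drawn by the sub-grid every hour
CAPACITY = 4          # battery capacity, in 1 kWh steps
ACTIONS = (0, 1, -1)  # idle, charge 1 kWh, discharge 1 kWh


def step(hour, soc, action):
    """Apply an action at a given hour; returns (new state of charge, reward).

    Infeasible actions (charging when full, discharging when empty) act as idle.
    Charging adds to grid import; discharging covers part of the demand."""
    if not 0 <= soc + action <= CAPACITY:
        action = 0
    grid_import = DEMAND + action
    return soc + action, -PRICES[hour] * grid_import


def greedy(q, hour, soc):
    """Index of the action with the highest Q-value in this state."""
    return max(range(len(ACTIONS)), key=lambda i: q[hour][soc][i])


def train(episodes=10000, alpha=0.1, epsilon=0.1, seed=0):
    """Tabular Q-learning over states (hour, state of charge); undiscounted 24-step days."""
    rng = random.Random(seed)
    q = [[[0.0] * len(ACTIONS) for _ in range(CAPACITY + 1)] for _ in range(24)]
    for _ in range(episodes):
        soc = 0  # battery starts empty each day
        for hour in range(24):
            # Epsilon-greedy exploration.
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(q, hour, soc)
            new_soc, reward = step(hour, soc, ACTIONS[a])
            future = max(q[hour + 1][new_soc]) if hour < 23 else 0.0
            q[hour][soc][a] += alpha * (reward + future - q[hour][soc][a])
            soc = new_soc
    return q


def greedy_schedule(q):
    """Roll out the learned policy for one day; returns (executed actions, total cost in £)."""
    soc, cost, executed = 0, 0.0, []
    for hour in range(24):
        new_soc, reward = step(hour, soc, ACTIONS[greedy(q, hour, soc)])
        executed.append(new_soc - soc)  # records idle if the chosen action was infeasible
        soc, cost = new_soc, cost - reward
    return executed, cost


q = train()
schedule, cost = greedy_schedule(q)
idle_cost = sum(PRICES) * DEMAND  # cost of never using the battery
```

Comparing `cost` with `idle_cost` shows how much the learned schedule saves over leaving the battery unused.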

The ultimate dream is to drive to a new town and read on the signpost, "Ottery St. Catchpole, twinned with twinCity".
