by Dr Seán Carroll

Updated 26 April 2023

LangChain - A Toolbox for Supercharging Large Language Models

We’re living in the future! In the span of six months, we’ve gone from having our minds blown by the power of ChatGPT to a world where the average person, with just a sprinkling of Python (or JavaScript if that's your flavour), can build their own custom semi-autonomous agents.

Like kitting out your favourite videogame character, these AI-powered agents can be equipped with a range of tools, giving them additional abilities, or to continue the video game analogy - power boosts! In this tutorial, I’ll give an introduction to the framework that’s making all of this possible - LangChain.

🦜🔗 LangChain...to LLMs what NumPy is to Arrays

LangChain is a framework (or library) for working with Large Language Models (LLMs). It packages up a whole host of tools that speed up the process of building interesting workflows around LLMs.

Anyone familiar with development in any language will be familiar with using third-party libraries to speed up their development - if you're a Python dev, think about LangChain as the NumPy or Pandas for working with LLMs.

We’ve all seen the chatbot-style back-and-forth interaction between a language model and a user. If you’re comfortable writing some basic code, you may have even achieved this programmatically using an API.

Once you start interacting with these foundational models via their APIs, you'll find that your gears start turning on what’s possible in terms of different tools and user experiences that could be built. This is where the LangChain library comes in handy - instead of building everything from scratch, you can use the helper classes and abstractions already available within the library.

LangChain’s 3 big concepts

The library's list of features and tools is growing rapidly, but fundamentally, LangChain introduces three new concepts - we’ll consider each below.

Prompt templates:

These are exactly what they sound like - templates for interacting with LLMs. They provide a shortcut for initiating a specific type of interaction with your LLM of choice. We can use pre-defined prompt templates that work straight out of the box or build our own custom ones.

Chains

Chains are based on the concept of sequentially linking together multiple elements or building blocks to form a repeatable workflow. In a simple example, we might chain together a prompt template and language model so that we can feed in user input at one end, inject it into a template to format it and better contextualise it and then feed that template to the LLM. To go one step further, we might have our LLM prepare the input for a further link in the chain that reaches out and performs a web search on Google.

Agents

For me, this is where things get almost spooky. I know it’s probably not a good idea to anthropomorphise these tools and the underlying models. My hardcore machine-learning colleagues would definitely frown on it - but it’s fun…so bear with me. An agent is like a mini-worker. You can give them a brain (I know, I know - it’s not a brain, but just go with it) in the form of an LLM; typically, OpenAI’s GPT-3.5 (ChatGPT) works very well.

Then we equip our worker with a toolbox, and into this toolbox, we might put the ability to search the web or the ability to write and execute code. Then we can give our agent a task and watch the magic happen as it works its way through the task - step by step in an almost human-like fashion. Agents are the closest thing we have right now to customised mini-me style workers that we can instantiate and deploy on whatever task we don’t want to spend time personally doing.

If you really want to have your mind blown - look up AutoGPT to see where the autonomous agent idea has evolved. We’ll take a deeper dive into AutoGPT in another post.

The code - getting hands-on with LangChain

This is still very abstract so far. Let’s get into the details and see some basic examples of how we can put LangChain to work. We’ll keep it pretty light in this tutorial and just introduce the basics - we have a bigger build-along project coming soon. At any point, if you want to dig a little deeper, just head over to the LangChain docs.

I’ll be working in a Jupyter Notebook using Python - if you want to code along, you can use plain Python script files if you prefer. Keep in mind that you’ll need your own API keys - I’ll flag this up again when we get there.

The basic PromptTemplate

Let’s start simple and take a look at Prompt templates. Prompt templates become particularly helpful when we don’t want to hard-code a prompt or type one from scratch every time. They’re most helpful when we have a certain style or type of model interaction we want to execute repeatedly, with minor changes each time. One place that this becomes very helpful is when building end-user experiences where you want to constrain the user to one type of interaction. This will become clearer as we see some examples.

First, we need to install langchain and while we’re at it, we can install openai. Type the following commands into your terminal:

pip install langchain

pip install openai

Now we can import the PromptTemplate class from langchain and define a simple prompt instance.

from langchain import PromptTemplate

#Define prompt template with one input variable

prompt = PromptTemplate(
    input_variables=["topic"],
    template="Please explain how a {topic} works and give me some example",
)

Notice that the template behaves as a Python f-string with the input variables injected into the string. Now we can call this prompt using its format method:

promptForLLM = prompt.format(topic="neural network")

print(promptForLLM)

…which yields, Please explain how a neural network works and give me some example.
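To make the f-string comparison concrete, here is a minimal plain-Python sketch of the idea. SimpleTemplate is a hypothetical stand-in written purely for illustration, not LangChain's actual implementation; it just shows that a template is a string with named placeholders, plus a check that every declared variable has been supplied.

```python
from dataclasses import dataclass


@dataclass
class SimpleTemplate:
    """Hypothetical stand-in for a prompt template (not LangChain code)."""
    input_variables: list
    template: str

    def format(self, **kwargs) -> str:
        # Refuse to build a prompt if a declared variable is missing.
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise KeyError(f"Missing input variables: {missing}")
        # str.format fills each {placeholder} with the matching keyword argument.
        return self.template.format(**kwargs)


simple_prompt = SimpleTemplate(
    input_variables=["topic"],
    template="Please explain how a {topic} works and give me some example",
)
print(simple_prompt.format(topic="neural network"))
```

The early check on missing variables is the part that plain string formatting doesn't give you for free, and it's one reason a template object is handy once prompts are built from user input.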

Provide more context with a FewShotPromptTemplate

Remember, we’re creating a template to help build a prompt that will be fed into an LLM. One way we can get a better response from an LLM is to give it some examples of the type of response we’re looking for. This is where the FewShotPromptTemplate comes in.

We can start by importing the FewShotPromptTemplate class and defining a list of examples to give the LLM an idea of what we’re looking for.

from langchain import FewShotPromptTemplate

# A list of illustrative examples.

examples = [
    {
        "language": "Python",
        "description": "A versatile, easy-to-learn programming language widely used for web development, data analysis, artificial intelligence, and more."
    },
    {
        "language": "JavaScript",
        "description": "A dynamic scripting language primarily used for client-side web development to enable interactivity and enhance user experience."
    },
]

So, we’re defining a language and an associated description. The inference the model should make here is that when some new language is provided in a prompt, we’d like a short description as the response.

The next step is to take the list of dictionaries, examples, defined above and build a template that will transform each raw example dictionary and structure it into the format that the LLM will receive. We can use the PromptTemplate class again for this.

examplePrompt = PromptTemplate(
    input_variables=["language", "description"],
    template="""
Language: {language}
Short description: {description} \n
"""
)

Finally, we can combine examples and examplePrompt into the final few-shot prompt using the FewShotPromptTemplate class.

prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=examplePrompt,
    prefix="Give a short description of the following programming languages: \n",
    suffix="Language: {language}\nDescription:",
    input_variables=["language"],
    example_separator="\n",
)

prefix is a string that we can add to the start of our prompt, and suffix is a string we can add to the end of our prompt - this is where we suggest to the LLM that we’d like a response! Now we can call the format method on our new prompt and pass in an input variable:

promptForLLM = prompt.format(language="Rust")

print(promptForLLM)

This outputs the following prompt.

Give a short description of the following programming languages:

Language: Python

Short description: A versatile, easy-to-learn programming language widely used for web development, data analysis, artificial intelligence, and more.

Language: JavaScript

Short description: A dynamic scripting language primarily used for client-side web development to enable interactivity and enhance user experience.

Language: Rust

Description:

Once we pass this to an LLM, we should expect a short description of the Rust programming language as the response.
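If you're curious how the pieces of a few-shot prompt fit together, the assembly can be sketched in a few lines of plain Python. build_few_shot_prompt is a hypothetical helper and the example descriptions are shortened for brevity; this illustrates the prefix + examples + suffix pattern rather than how LangChain implements it internally.

```python
def build_few_shot_prompt(examples, example_template, prefix, suffix, separator="\n", **inputs):
    """Join prefix, formatted examples, and suffix into one prompt string."""
    # Each example dictionary is rendered through the same small template.
    formatted_examples = [example_template.format(**example) for example in examples]
    # The suffix carries the new input and signals where the model should answer.
    pieces = [prefix, *formatted_examples, suffix.format(**inputs)]
    return separator.join(pieces)


demo_examples = [
    {"language": "Python", "description": "A versatile, easy-to-learn language."},
    {"language": "JavaScript", "description": "A scripting language for the web."},
]

demo_prompt = build_few_shot_prompt(
    examples=demo_examples,
    example_template="Language: {language}\nShort description: {description}\n",
    prefix="Give a short description of the following programming languages:\n",
    suffix="Language: {language}\nDescription:",
    language="Rust",
)
print(demo_prompt)
```

Ending the prompt on an open "Description:" line is the design choice doing the work here: the model's most natural continuation is the description we're after.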

Using Chains to String tools together

At this point, there’s a fair chance you’re scratching your head thinking, that’s an awful lot of code to write for a short prompt! You’re not wrong - this is a lot of work just to build the prompt. But remember, we’re trying to systematise our workflow so that we get higher quality, repeatable results - prompt templates deliver this.

The power of prompt templates really starts to come into focus when we incorporate them into chains. So let’s build a simple chain to send our prompt to ChatGPT and get a response.

Since I’ll be using ChatGPT, the first thing I’ll need is an OpenAI API key. There are other options, including open-source ones, that you can choose instead. They all work the same way with the LangChain LLM class, so feel free to swap in another model if you prefer.

Once you have your API key, the most convenient thing to do is set it as a local environment variable and forget about it.

import os

os.environ["OPENAI_API_KEY"] = "your-key-goes-here"
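If you'd rather not paste a key into a notebook cell, one option is to set the variable in your shell and have the code simply check that it's there. The sketch below assumes that approach; require_api_key and DEMO_API_KEY are hypothetical names used only for illustration.

```python
import os


def require_api_key(name: str) -> str:
    """Return the named environment variable or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Demo only: set a placeholder so the example runs without a real key.
os.environ.setdefault("DEMO_API_KEY", "placeholder-not-a-real-key")
print(len(require_api_key("DEMO_API_KEY")) > 0)
```

Failing early with a readable message tends to be easier to debug than an authentication error surfacing deep inside an API call.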

Next, we can import a wrapper from LangChain that takes care of the details of interacting with OpenAI’s API. Note that we’re specifically importing the wrapper for interacting with OpenAI’s chat models.

from langchain.chat_models import ChatOpenAI

Now we can instantiate our wrapper as openaichat - within our LangChain workflow, the openaichat variable will be how we pass around our LLM.

openaichat = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0.9)

Here we've specified the name of the model we want to use and the temperature parameter which, loosely speaking, controls the randomness of the generated text. The closer the temperature is to 1, the more fruity the response, while the closer it is to zero, the more deterministic and focused the response is.
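For a rough intuition of what temperature does, here is the standard temperature-scaled softmax applied to some made-up scores for three candidate tokens. How any particular provider applies temperature internally is an assumption on my part; the sketch only shows the general effect of dividing scores by the temperature before turning them into probabilities.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


toy_logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for temperature in (0.1, 0.9):
    probs = softmax_with_temperature(toy_logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At the low temperature almost all of the probability lands on the top-scoring token, while at 0.9 the alternatives keep a meaningful share, which is where the extra variety comes from.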

LangChain has a number of different chain types we can work with but the simplest is the LLMChain class which allows us to specify an LLM and a prompt. We can import the LLMChain class and instantiate a chain with our openaichat model and the few shot template we defined above.

from langchain.chains import LLMChain

#Create a simple chain with chat model and few shot prompt template

chain = LLMChain(llm=openaichat, prompt=prompt)

Now we can call the chain and simply pass it a single argument that will be passed on to our prompt template. So, let’s say I want a short description of the Rust programming language. I can execute:

chain.run("Rust")

This will trigger a call to OpenAI and pass our formatted prompt that has Rust injected into it. In my case, this yields the following response:

A modern systems programming language designed for speed, reliability, and memory safety. Rust is known for its strong type system, efficient memory usage, and ability to prevent common programming errors. It is often used for developing operating systems, network servers, and other high-performance applications.

Nice! Possibly a little long and the model isn’t respecting our implied suggestion to only return one line…but we can iterate on the prompt from here to improve the response.
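Stripped right back, a chain like this one is just "format the prompt, then call the model". The sketch below shows that idea in plain Python; make_chain and fake_llm are hypothetical names, and the stub model stands in for a real API call so the example runs offline. It is not how LLMChain is implemented.

```python
def make_chain(prompt_template, llm):
    """Return a function that formats the prompt, then calls the model."""
    def run(**inputs):
        prompt_text = prompt_template.format(**inputs)
        return llm(prompt_text)
    return run


def fake_llm(prompt_text: str) -> str:
    # Hypothetical stub: a real chain would send prompt_text to a model API.
    return f"[model response to {len(prompt_text)} characters of prompt]"


describe_language = make_chain("Language: {language}\nDescription:", fake_llm)
print(describe_language(language="Rust"))
```

Because the model is passed in as an argument, swapping the stub for a real model (or a different provider) doesn't touch the prompt logic, which is the same separation the LangChain classes give you.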

Hopefully, at this stage, you’re starting to get a general sense of the LangChain workflow and how prompts and chains work. Next, we’ll step things up a gear with Agents!

Agents - Unleashing your LLM on the world!

I’ve hinted at the power of agents already in this article and we’ve been slowly building up to them with PromptTemplates and Chains. Now we can pour some petrol on the fire and introduce agents properly!

Agents essentially give us the ability to trigger different chains, based on user input. In addition, we can equip our agent with different tools that it will autonomously decide to use based on what we’ve asked it to do. Now we’re starting to get into a realm where you need to be somewhat careful because tools give your agent the ability to interact with the outside world!

You can create your own custom tools, but the list of plug and play tools that LangChain provides wrappers for is always expanding. At the time of writing the list looks like this:

- Apify

- Arxiv API

- Bash

- Bing Search

- ChatGPT Plugins

- DuckDuckGo Search

- Google Places

- Google Search

- Google Serper API

- Gradio Tools

- Human as a tool

- IFTTT WebHooks

- OpenWeatherMap API

- Python REPL

- Requests

- Search Tools

- SearxNG Search API

- SerpAPI

- Wikipedia API

- Wolfram Alpha

- Zapier Natural Language Actions API

This list is arranged alphabetically, so the most exciting tool is right at the bottom! Your agents now have the ability to communicate via Zapier with over 5000 different applications across the web!

We’ll have a more in-depth build-along project out soon that looks at building agents, so for now, we’ll just demo the basic workflow and build a simple agent that can search the web and perform basic math more reliably than a typical LLM.

I’ll be using the SerpAPI tool to query Google’s search index. You can sign up for an API key and get started for free. Again, I’ll store my API key as an environment variable.

os.environ["SERPAPI_API_KEY"] = "your-key-goes-here"

Next, we can import the relevant classes for working with tools and agents.

from langchain.agents import load_tools, initialize_agent

We then import the OpenAI wrapper, define a new OpenAI LLM, and load some tools by passing their string identifiers, along with the LLM, into load_tools:

from langchain.llms import OpenAI

llm = OpenAI(temperature=0.0)

tools = load_tools(['serpapi', 'llm-math'], llm=llm)

Now we can instantiate a new agent with these tools and specify which type of agent we want from those available in LangChain. We’ll use a zero-shot-react-description agent which can work with any number of tools and decides which to use based on the current task and each tool’s description.

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose = True)

I’ve specified verbose=True here so that we can see the chain of thought that the agent goes through as it processes our prompt.
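The idea that an agent picks a tool based on its description is easier to picture once you see the descriptions laid out as text. The sketch below is a guess at the general shape, not LangChain's actual prompt: ToyTool, render_tool_menu, and the wording of the menu are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyTool:
    """Hypothetical tool record: a name, a description, and a callable."""
    name: str
    description: str
    func: Callable[[str], str]


def render_tool_menu(tools) -> str:
    """Build the text block that tells the model which tools exist."""
    lines = [f"{tool.name}: {tool.description}" for tool in tools]
    return "You have access to the following tools:\n" + "\n".join(lines)


toy_tools = [
    ToyTool("Search", "Useful for questions about current events.", lambda q: "stub result"),
    ToyTool("Calculator", "Useful for arithmetic questions.", lambda q: "stub result"),
]
print(render_tool_menu(toy_tools))
```

Since the description is all the model has to go on when choosing, a clear, specific description matters as much as the tool's code.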

Now we’re ready to give our agent a prompt - something that will require it to search the web for an answer and then do some math on what it finds. This example is a little contrived for demonstration purposes, but let’s ask it…

What is the age of the current president of the United States multiplied by the age of the current Prime Minister of the United Kingdom?

To deal with this it will need to work out the current President and Prime Minister - the key here is that the Prime Minister has changed since OpenAI’s training cut-off date. So if we feed this query straight into ChatGPT, we’ll get…

As of my knowledge cutoff of September 2021, the current President of the United States was Joe Biden, who was born on November 20, 1942. The current Prime Minister of the United Kingdom was Boris Johnson, who was born on June 19, 1964.

Therefore, as of September 2021, the age of Joe Biden would have been 79 years old, and the age of Boris Johnson would have been 57 years old. Multiplying these ages together gives:

79 x 57 = 4,503

This is close: the multiplication is right, although Biden was still 78 in September 2021 (his birthday is in November). More importantly, it’s not current. Our agent, on the other hand, will give a more up-to-date answer when we run it.

agent.run("What is the age of the current president of the United States multiplied by the age of the current Prime Minister of the United Kingdom?")

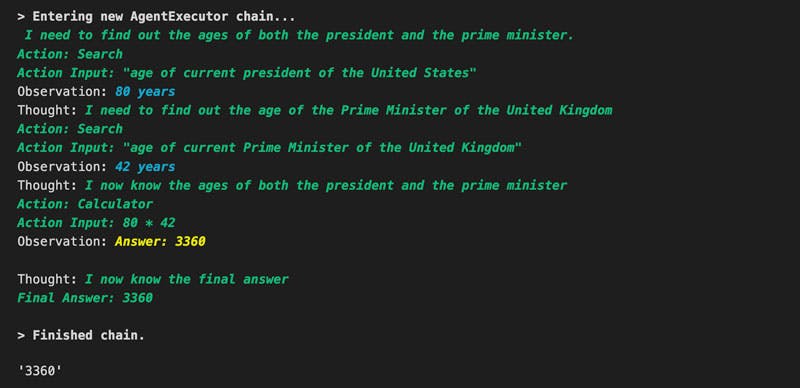

This results in the following chain of thought which ultimately gives the correct answer, Fig 1.

Figure 1. LangChain agent chain of thought as it processes the prompt using a multiple tools

This is obviously just a toy example thrown together pretty quickly. Still, we can see the power and potential of agents, particularly if they’re carefully constructed and tuned to perform a particular task.

The innovation here is the ability of the agent to chain together multiple iterations of observation, thought, and action and have the outputs of one cycle feed the next cycle. With an LLM as the interpretation layer, the agent can, with a relatively well-defined problem, deliver human-like performance.
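The thought, action, observation cycle can be sketched as a small loop. Everything here is a toy under stated assumptions: scripted_llm is a hard-coded stand-in for a real model, calculator is a deliberately tiny tool, and the "Action:" / "Final Answer:" text format is made up for the example rather than taken from LangChain.

```python
import re


def calculator(expression: str) -> str:
    """Toy calculator tool: multiplies two integers written as 'a * b'."""
    left, right = (int(part) for part in expression.split("*"))
    return str(left * right)


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: picks the next step from the transcript."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply the two numbers.\nAction: Calculator\nAction Input: 6 * 7"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observation}"


def run_agent(question: str, tools: dict, llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += "\n" + reply
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(.*)\nAction Input:\s*(.*)", reply)
        if action is None:
            raise ValueError("Model reply contained neither an action nor a final answer")
        tool_name, tool_input = action.group(1).strip(), action.group(2).strip()
        # Run the chosen tool and feed its output back in as the next observation.
        transcript += f"\nObservation: {tools[tool_name](tool_input)}"
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("What is 6 times 7?", {"Calculator": calculator}, scripted_llm))
```

The growing transcript is what lets the output of one cycle feed the next, and the max_steps cap is a simple guard against an agent that never reaches a final answer.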

With this type of functionality, repetitive and formulaic tasks can be offloaded to your own private army of agents. We’ve already seen this type of automation with services like Zapier and Make.com offering the ability to build task automation workflows. With the addition of LLMs, the degree of automation can be taken to a whole new level. We’re starting to see this happen with tools like AutoGPT.

If you found this whistlestop tour of LangChain interesting, we’ll be releasing more how-to and code-along projects here on the digiLab Academy site over the next few months - so consider joining our mailing list to get notified when we publish new content.
